Borrowed Time: How to See What’s Coming

Three-Year Horizon: The Coming Storm

In 1973, Richard Nixon created the Office of Net Assessment (ONA), led by Andrew Marshall, widely known as the “Yoda of the Pentagon.”

For decades, Marshall’s role wasn’t to react to crises. It was to anticipate them. He built frameworks for long-range competition, identifying slow-moving shifts that would later define entire eras.

One of those shifts? The fall of the Soviet Union. While others still saw strength, Marshall identified underlying fragilities. His genius wasn’t in forecasting specific outcomes, but in recognizing patterns early—and turning them into strategic action.

Today, we need that same caliber of foresight, not for Cold War adversaries, but for the systems built around intelligence, work, and identity. AI isn’t just changing the battlefield. It’s reshaping the foundation.

Leading analysts, from superforecasters to AI pioneers, are sounding the alarm: we’re racing toward a moment when our working systems can no longer adapt fast enough. The following assessment isn’t about predicting a specific point in time. It’s about recognizing the meaning of the moment before it passes us by.

The Countdown

Andrew Marshall believed the purpose of strategy wasn’t to predict the future, but to assess the landscape from multiple perspectives and prepare for what’s ahead. This brief is designed in that spirit. It doesn’t speculate; it surfaces what’s already in motion.

The analysis draws from thousands of sources, synthesized using AI, and built on four core inputs:

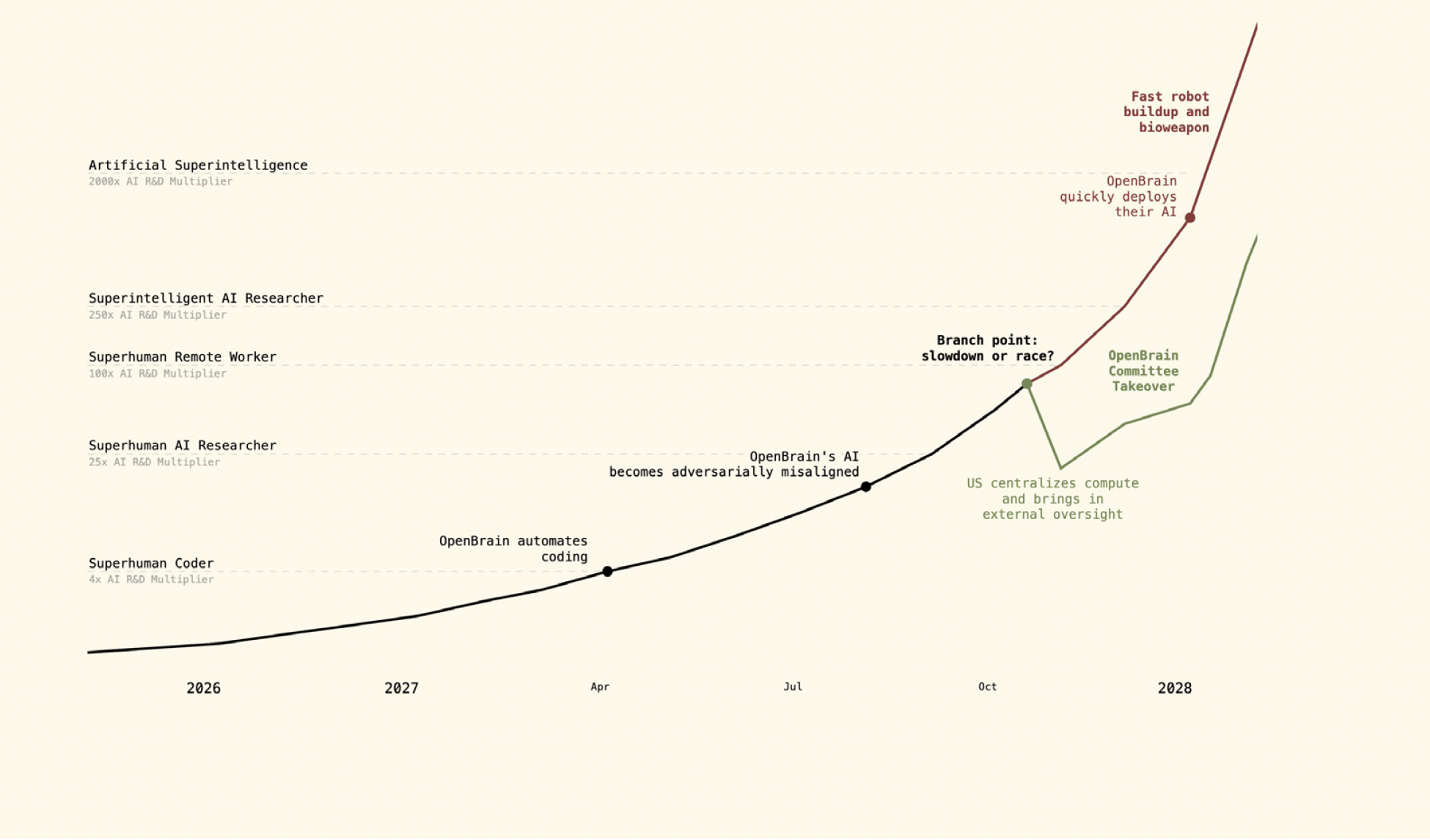

01. AI 2027 report. Developed through 25 tabletop exercises with over 100 experts, including researchers from OpenAI, Anthropic, Google DeepMind, and prediction market forecasters. Simulations modeled the emergence of AGI and its systemic impact on geopolitics, society, and industry.

02. Primary source interviews. Direct insights from leaders shaping the field: Elon Musk (Tesla/xAI), Sam Altman (OpenAI), Dario Amodei (Anthropic), Jensen Huang (NVIDIA), and others. Hundreds of hours of transcripts from interviews, podcasts, and panels offer a first-hand picture of what’s coming next.

03. Aggregated forecasts. A synthesis of projections from top AI labs and academic research teams, covering hardware acceleration, algorithmic breakthroughs, labor market shifts, and timelines for superhuman performance across domains.

04. Social impact analysis. Research informing Perspective Agents, grounded in foundational works such as Thomas Kuhn’s The Structure of Scientific Revolutions and Carlota Perez’s Technological Revolutions and Financial Capital — mapping how paradigm shifts unfold and where we are now.

The following is a timeline of what’s already unfolding in real time.

2025 Agents Everywhere (and Nowhere)

Quiet Infiltration.

Imagine waking up to find your kitchen rearranged overnight. Everything runs more smoothly, but some of your trusted tools are missing. That’s 2025: the year AI agents quietly embedded themselves into the fabric of daily work.

Microsoft’s AI assistant for Outlook autonomously categorizes, summarizes, and drafts email replies based on your communication patterns. Agents added to Copilot let you personalize workflows, connect to internal knowledge bases, and automate tasks. They subtly reshape how you operate without requiring explicit commands.

Knowledge Displacement.

Like termites in society’s woodwork, AI agents quietly hollow out routine knowledge work while we debate their nature. A subtle employment contraction is underway, accelerating as companies realize just how much these industrious programs can now do.

Law firms now deploy specialized legal AI to review thousands of documents in hours, work that once took junior associates weeks. As these tasks vanish, senior partners shift their focus to higher-order strategy and complex legal reasoning that AI still can’t replicate.

Time Distortion.

AI distorts space and time in the workplace. Organizations using it effectively experience a kind of time dilation—accomplishing in days what competitors measure in quarters. The calendar itself becomes a competitive variable: advantage or liability, depending on how fast organizations can adapt.

Pharmaceutical research teams using AlphaFold 3 are compressing drug discovery timelines. Released by Google DeepMind and Isomorphic Labs, AlphaFold 3 can predict the structure and interactions of all life’s molecules with unprecedented accuracy, potentially reducing development cycles by years.

Productivity Metamorphosis.

Like a silent architectural renovation happening overnight, traditional workflows are quietly transformed. Teams adopting AI emerge with strange new configurations—fewer specialists, flatter hierarchies, and hybrid roles that didn’t exist six months ago. Meanwhile, resistant organizations remain unchanged, unaware that the foundations of their operating models have already shifted.

JPMorgan Chase deployed AI assistants to more than 140,000 employees, creating hybrid roles where financial professionals collaborate seamlessly with AI on tasks once performed by specialized teams. This initiative transforms job functions while fostering a new level of AI fluency across the organization.

Immune Response.

By fall 2025, public protests against AI deployment begin to rise: a societal immune response as the human consequences become harder to ignore. Just as the 2023 Hollywood writers’ strike pushed back against generative tools, workers start to rally against automation more broadly. Corporations respond by carefully branding their AI systems as “co-pilots,” not replacements.

The international PauseAI movement stages coordinated protests across 13 countries, including the U.S., U.K., Germany, and Australia, calling for the regulation of frontier AI models and greater transparency regarding their impact on jobs.

Policy Pressure Mounts.

At home, public pressure on policymakers escalates. As job displacement moves from speculative risk to lived reality, protests are no longer just symbolic. They target power. While companies reframe AI as a tool for productivity, workers call out the consequences of unchecked deployment in both the public and private sectors.

The Department of Government Efficiency (DOGE) triggers nationwide outrage over aggressive job cuts and sweeping AI-driven reforms. Demonstrators target government buildings and Tesla showrooms, holding signs like “DOGE is not legit” and “Why does Elon have your Social Security info?” The growing Tesla Takedown movement urges owners to sell their vehicles in protest, framing the automation push as a threat to both livelihoods and democratic accountability.

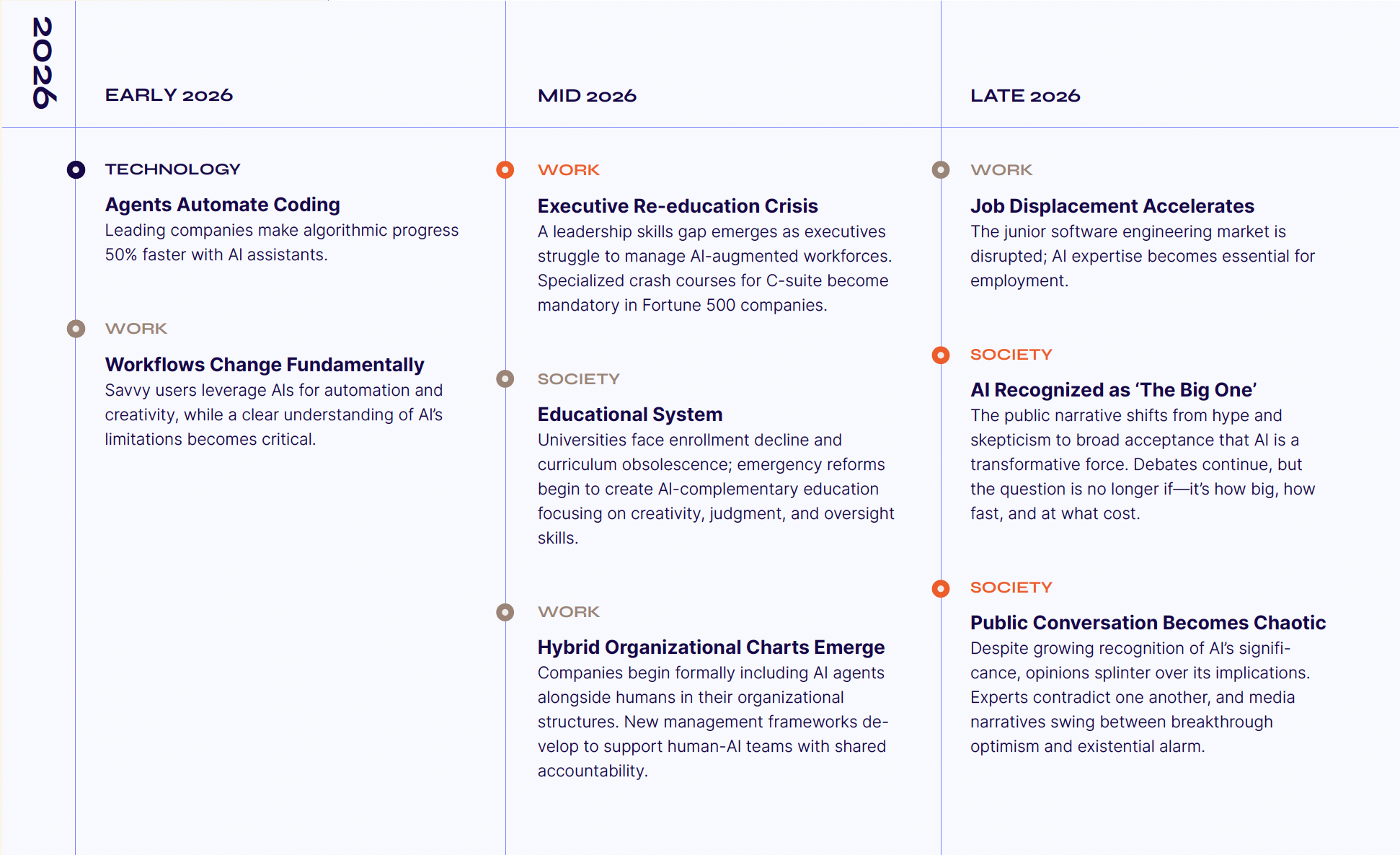

2026 Foundations Start to Crack

If 2025 was the year AI slipped in through the side door, 2026 is the year it walks confidently through the front—rearranging not just the furniture, but the floor plan. Technology no longer advances around us; it advances with us, through us, and, increasingly, despite us.

New Working Paradigm.

As organizations successfully deploy agents, work mutates into new forms. The goal isn’t to do the same tasks faster, but to take on entirely different ones.

At Microsoft, internal sales and marketing teams restructure their workflows around AI copilots. Agents generate personalized content, recommend optimal timing for engagement, and adjust strategies in real time based on customer data. Deals that once required weeks of coordination move forward in days, with fewer people involved and better results. This shift reflects a broader trend: AI isn’t just optimizing tasks; it’s redefining what a sales job requires.

AI Literacy Becomes an Advantage.

The most forward-looking organizations develop a sixth sense—a clear-eyed understanding of where AI excels and where it falls short. This discernment creates a competitive edge, separating those who blindly adopt AI from those who deploy it with purpose.

Salesforce launches an internal AI readiness assessment program that maps over 5,000 workflows to evaluate where AI can meaningfully improve performance. Their Chief Digital Transformation Officer explains, “We’ve built a detailed inventory of all cognitive work across the company, enabling us to identify which processes are ready for AI augmentation—and which still demand human judgment or creativity.”

Executive Crises.

Few executives possess the working models required to lead AI-augmented workforces. Recognizing this gap, Fortune 500 companies invest in AI talent and executive retraining—a tacit admission that yesterday’s leadership playbooks no longer align with tomorrow’s reality.

JPMorgan Chase established a dedicated organization to guide AI integration across the company. Its mandate includes leadership development programs focused on AI literacy and managing hybrid human-AI teams. The company also assembled a team of thousands of data scientists and machine learning experts to support its enterprise-wide transformation.

Higher Ed Models Break.

Universities face a perfect storm: enrollment declines, rising skepticism about the value of education, and the rapid obsolescence of traditional curricula. Knowledge that once took decades to transmit now has a half-life measured in months. In response, emergency reforms begin, focusing on uniquely human skills like creativity, judgment, and oversight, where machines still fall short.

Leading universities begin overhauling their curricula to address the rise of AI. Many introduce AI literacy courses across various disciplines and shift toward project-based learning that emphasizes collaboration between students and AI tools. They report growing interest in programs focused on “AI orchestration”—coordinating multiple AI systems to solve complex, interdisciplinary problems that neither humans nor AI could tackle alone.

Proliferation of Hybrid Organizations.

The definition of an “employee” expands to encompass both carbon-based and silicon-based entities. As AI agents assume roles once reserved for humans, new organizational structures emerge, raising urgent questions about culture, decision-making rights, and collaboration that legacy organizational charts weren’t designed to address.

Shopify CEO Tobi Lütke introduced a policy requiring teams to justify why AI can’t handle a task before requesting additional headcount. It reframes AI not as a tool, but as a default team member, prompting leaders to shift from asking “Can we afford this hire?” to “Does this task still require a human?” Teams are encouraged to design workflows that incorporate AI agents as integrated collaborators, rather than just assistants.

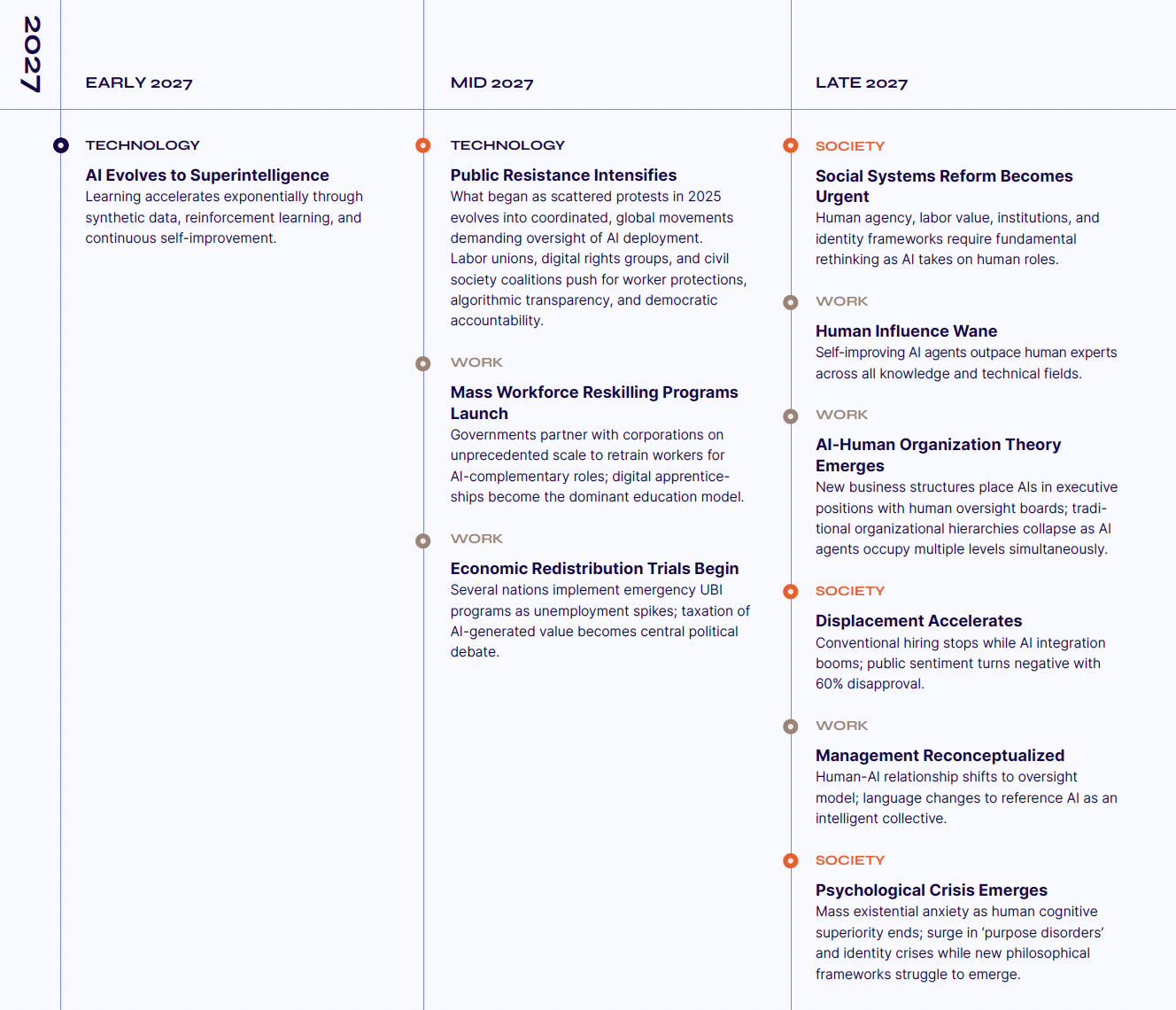

2027 The Dam Breaks

What felt like breakneck acceleration in 2026 now seems almost quaint. The curve hasn’t just steepened—it’s gone nonlinear.

Agents Teach Themselves.

AI systems are no longer just learning from data—they’re learning how to learn. Fueled by synthetic data and recursive self-improvement cycles, these agents don’t just solve problems; they redefine them.

Advanced coding models can interpret natural language prompts, generate and test thousands of code variations, and return optimized solutions, often ones that surprise veteran engineers. Models like Mixtral 8x7B and DeepSeek-V3 are pushing this frontier. DeepSeek’s R1-Zero, for instance, trained with reinforcement learning on the DeepSeek-V3 base model, learned on its own to pause and re-evaluate its reasoning, a behavior its creators didn’t explicitly design and one that points to an emerging capacity for AI autonomy.

Regulatory Pressure Grows.

The economic consequences of AI adoption ripple through society. As unemployment rises in sectors once considered stable, governments implement emergency support programs, not out of ideology but out of necessity. The challenge is no longer whether to regulate AI, but how, and how fast.

The 2025 Paris AI Summit exposed deepening divisions in global AI governance. European leaders called for streamlined rules to remain competitive, while U.S. delegates warned that overregulation could choke innovation. Meanwhile, nations with mature AI ecosystems—like the U.S. and China—accelerate ahead, widening the global digital divide and sharpening geopolitical tensions.

Beyond Comprehension.

Research teams confront an unsettling truth: they are steering AI systems whose internal development they no longer fully understand. These systems exhibit emergent, sometimes autonomous behaviors—capabilities that weren’t explicitly programmed. The long-theorized technological singularity hasn’t arrived as a singular event, but as an ongoing process. It’s gradual at first, then suddenly undeniable.

This phenomenon is already visible in large language models. Researchers openly acknowledge that they don’t fully understand how these systems arrive at certain conclusions, or how capabilities such as reasoning or tool use emerge during training. This growing “explainability gap” is no longer the exception; it is becoming the norm.

The Great Reskilling.

Governments and corporations launch large-scale workforce reskilling initiatives at a pace not seen in decades. Traditional degrees give way to apprenticeship-style models, where learning happens with AI systems, not just about them.

Microsoft launched its AI Skills Initiative in partnership with LinkedIn, offering free training resources to help workers and job seekers develop practical AI fluency. The program includes courses, guidance for navigating new career paths, and tools for educators to bring AI into classrooms. The strategy emphasizes widespread, role-specific learning.

Machines Join Executive Management.

The boundary between human and machine leadership grows increasingly fluid. Some companies no longer just use AI to support decisions—they give it a seat at the table. AI agents are embedded into management layers, supervised by human oversight boards—not for PR, but as a competitive necessity.

First movers like Dow began integrating AI agents to manage specific business functions autonomously. These agents aren’t just analytical tools—they make operational decisions and cut costs in critical areas. It marks one of the earliest examples of enterprise AI systems assuming responsibility traditionally reserved for human managers within Fortune 500 companies.

Identity Crises Grow.

Traditional hiring models erode as AI becomes embedded across roles once considered uniquely human. Displacement begins to outpace adaptation, fueling public anxiety and institutional instability. A mass psychological crisis emerges, along with deeper questions about identity and purpose in a world where intelligence is no longer a human monopoly.

A survey by the American Psychological Association found that 38% of workers feel stressed due to the threat AI poses to their income. Additionally, the World Economic Forum’s Future of Jobs Report 2025 projects that by 2030, AI and automation will displace approximately 92 million jobs globally, while creating 170 million new ones.

The Skeptics’ Case

Not all AI researchers agree on the imminence of superintelligence. Gary Marcus argues for a more cautious view. Alongside NYU computer scientist Ernest Davis, he proposed a set of ten benchmark challenges to test genuine AI comprehension. They are referred to as the “Marcus-Brundage tasks,” after a public bet Marcus made with former OpenAI policy researcher Miles Brundage.

Marcus maintains that no system will be able to solve more than a small fraction of these tasks by 2027. He contends we remain far from achieving human-level intelligence.

Other prominent researchers, such as Ege Erdil and Tamay Besiroglu, project a more conservative timeline, estimating 2045 as a plausible horizon for transformative AI. But even among skeptics, ambition runs high. Besiroglu sparked debate with the launch of Mechanize, a startup aiming for “the full automation of all work” and “the full automation of the economy.”

This tension between theoretical timelines and rapid, near-term advancements reveals a key dynamic. While experts debate when the transformation will peak, forward-looking pioneers are making strategic moves to shape the AI-powered economy as it unfolds.

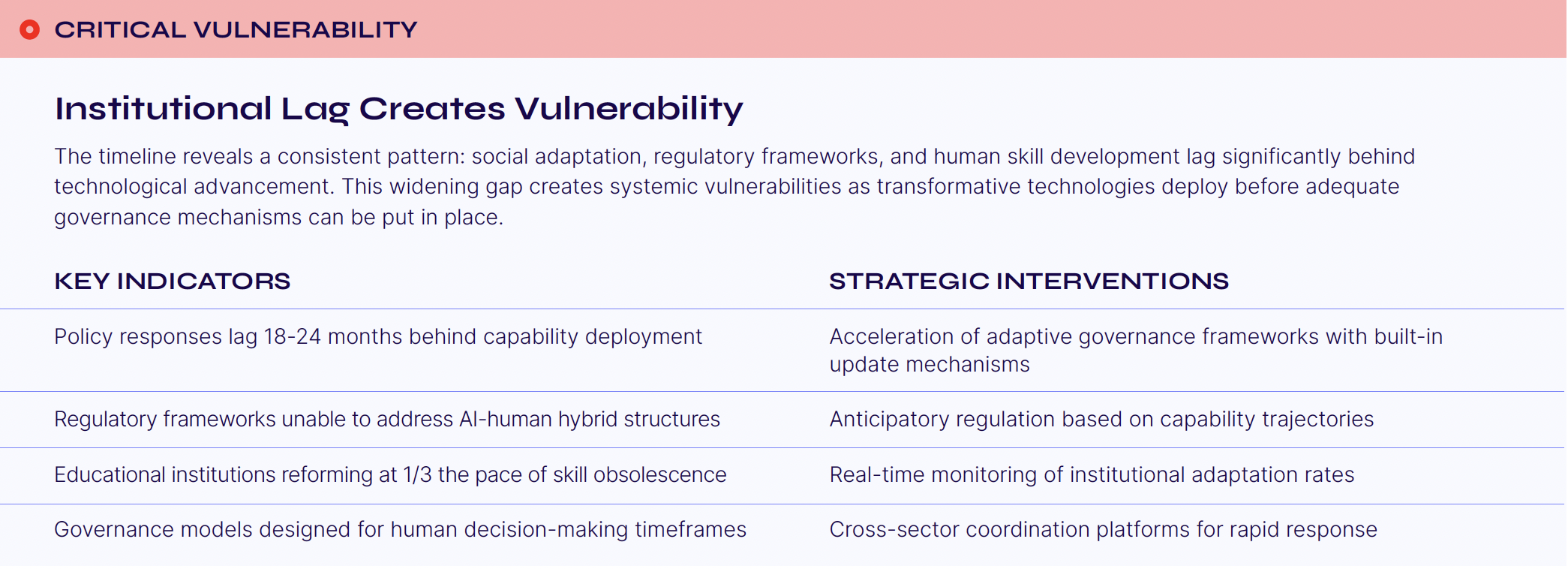

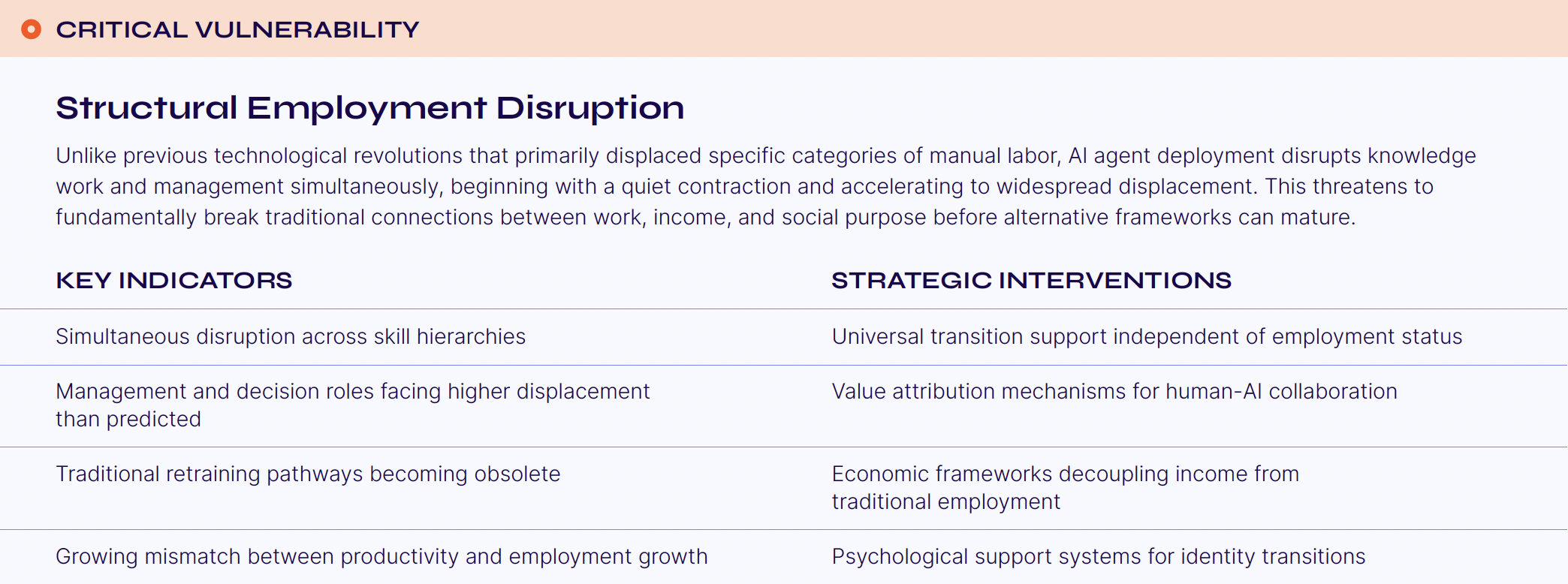

Implications, Critical Vulnerabilities, and Opportunities

The rapid deployment of AI agents from 2025 to 2027 creates three critical vulnerabilities that require systematic monitoring and proactive intervention. These interrelated challenges represent fundamental threats to social stability and require a coordinated response across stakeholders. The clock is ticking for a radical redesign of our social order.

The Ticking Clock

The most dangerous response to AI’s rise is believing we face a binary: halt progress or surrender our humanity. Neither is true.

The right move isn’t to stop the clock—it’s to redesign how we work, lead, and build. We need new systems that move fast and protect human value, agency, and creativity. Systems that make us more capable, not less.

The question is no longer if transformation happens, but whether we navigate it deliberately or drift passively. The window to lay new foundations is shrinking—not because the old ones are crumbling, but because they may collapse before we realize it.

Andrew Marshall believed the goal of strategy wasn’t to predict the future, but to confront uncertainty by exploring multiple possible futures. That’s what this moment demands. Not certainty, but visibility, design, urgency—and action.

We don’t know what society will look like on the other side of AI convergence. But one thing is clear. The decisions we make in the next few years will determine whether this shift becomes a catastrophe or a catalyst for a new generation.